passing functions to functions--Vittorio Romeo

How do you pass functions?

passing functions to functions

by Vittorio Romeo

From the article:

Since the advent of C++11, writing more functional code has become easier. Functional programming patterns and ideas are powerful additions to the C++ developer's huge toolbox. (I recently attended a great introductory talk on them by Phil Nash at the first London C++ Meetup; an older recording of it is available on YouTube.)

In this blog post I'll briefly cover some techniques that can be used to pass functions to other functions and show their impact on the generated assembly at the end...
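As a rough illustration of what "passing functions to functions" can look like, here is a minimal sketch of three common C++ techniques: a raw function pointer, a template parameter, and `std::function`. This is not necessarily the set of techniques or the code the article itself uses, and it makes no claims about the generated assembly. The names `apply_ptr`, `apply_template`, `apply_std_function` and `twice` are hypothetical, chosen only for this example.

```cpp
#include <functional>
#include <utility>

// Hypothetical helper #1: a raw function pointer.
// Accepts plain functions and captureless lambdas (which convert implicitly),
// but rejects lambdas that capture state.
int apply_ptr(int (*f)(int), int x) { return f(x); }

// Hypothetical helper #2: a template parameter.
// Accepts any callable; each distinct callable type produces its own
// instantiation, which gives the compiler a chance to inline the call.
template <typename F>
int apply_template(F&& f, int x) { return std::forward<F>(f)(x); }

// Hypothetical helper #3: std::function.
// Type-erased: one non-template signature accepts any compatible callable,
// at the cost of an indirect call and possibly a heap allocation
// when the std::function object is constructed.
int apply_std_function(const std::function<int(int)>& f, int x) { return f(x); }

// A plain function that all three helpers can accept.
int twice(int x) { return x * 2; }
```

For example, `apply_ptr(twice, 5)` yields `10`, and given `int offset = 10; auto add_offset = [offset](int x) { return x + offset; };`, both `apply_template(add_offset, 5)` and `apply_std_function(add_offset, 5)` yield `15`. `apply_ptr(add_offset, 5)` would not compile, because a capturing lambda cannot convert to a function pointer. The trade-off sketched here is flexibility and code size (template instantiations, type erasure) versus the constraints of a plain pointer.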

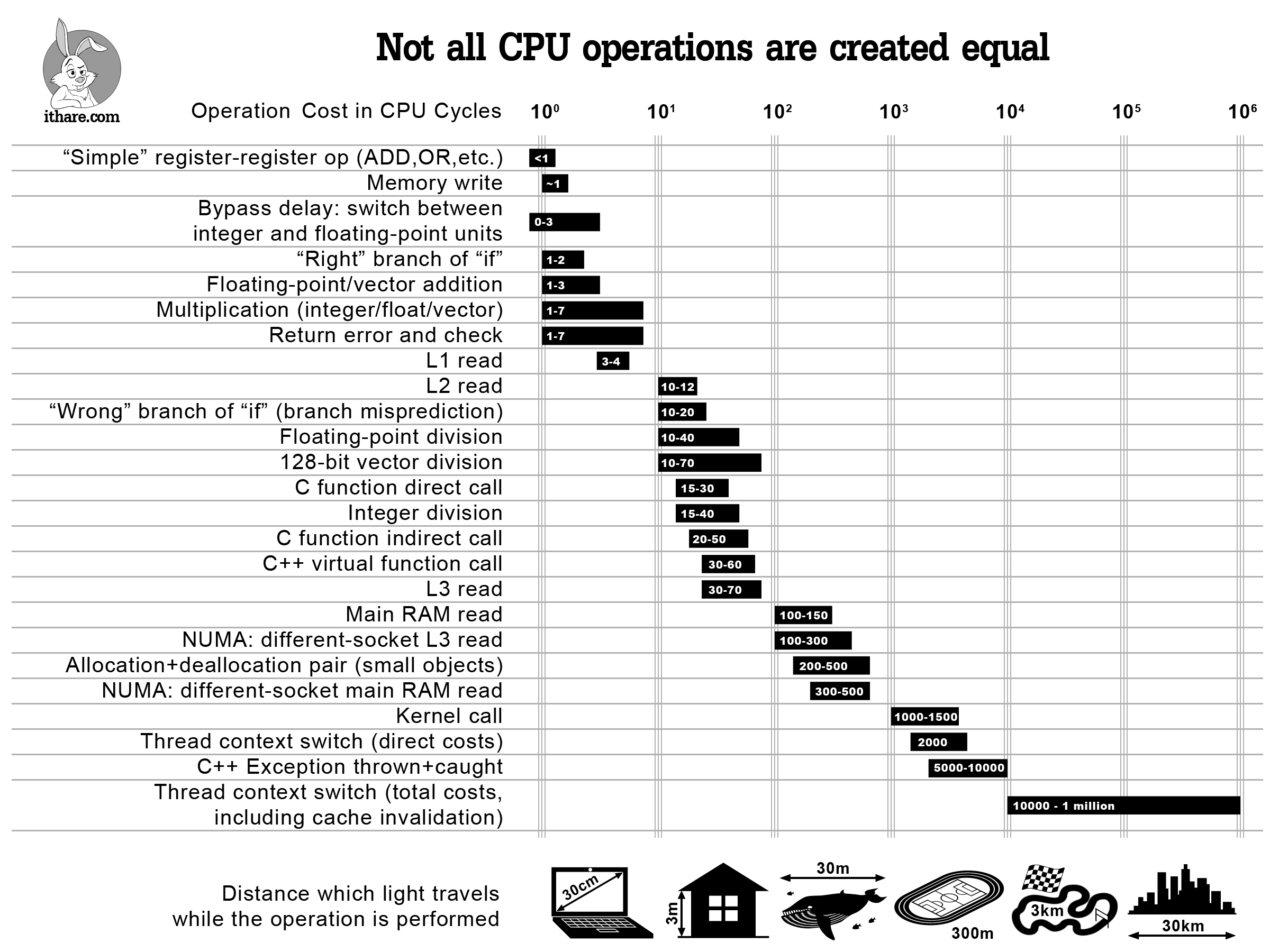
