C++26: constexpr Exceptions -- Sandor Dargo

In recent weeks, we’ve explored language features and library features becoming constexpr in C++26. Those articles weren’t exhaustive — I deliberately left out one major topic: exceptions.

C++26: constexpr Exceptions

by Sandor Dargo

From the article:

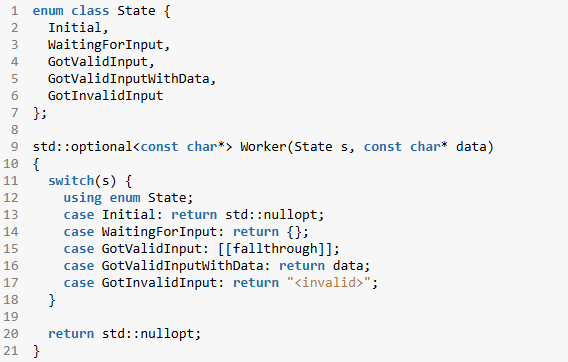

P3068R6: Allowing exception throwing in constant-evaluation

The proposal for static reflection suggested allowing exceptions in constant-evaluated code, and P3068R6 brings that feature to life.

`constexpr` exceptions are conceptually similar to `constexpr` allocations. Just as a `constexpr` `std::string` can't escape constant evaluation and reach runtime, `constexpr` exceptions also have to remain within compile-time code.

Previously, throwing an exception during constant evaluation caused a compilation error, even if the exception would have been caught. With C++26, such code can now compile. Only an exception that is actually thrown and left uncaught still triggers a compile-time error, and that error now comes with more meaningful diagnostics.
