Concurrency Flavours -- Lucian Radu Teodorescu

Concurrency has many different approaches. Lucian Radu Teodorescu clarifies terms, showing how different approaches solve different problems.

Concurrency Flavours

by Lucian Radu Teodorescu

From the article:

Most engineers today use concurrency – often without a clear understanding of what it is, why it’s needed, or which flavour they’re dealing with. The vocabulary around concurrency is rich but muddled. Terms like parallelism, multithreading, asynchrony, reactive programming, and structured concurrency are regularly conflated – even in technical discussions.

This confusion isn’t just semantic – it leads to real-world consequences. If the goal behind using concurrency is unclear, the result is often poor concurrency – brittle code, wasted resources, or systems that are needlessly hard to reason about. Choosing the right concurrency strategy requires more than knowing a framework or following a pattern – it requires understanding what kind of complexity you’re introducing, and why.

To help clarify this complexity, the article aims to map out some of the main flavours of concurrency. Rather than defining terms rigidly, we’ll explore the motivations behind them – and the distinct mindsets they evoke. While this article includes a few C++ code examples (using features to be added in C++26), its focus is conceptual – distinguishing between the flavours of concurrency. Our goal is to refine the reader’s taste for concurrency.

Value semantics is a way of structuring programs around what values mean, not where objects live, and C++ is explicitly designed to support this model. In a value-semantic design, objects are merely vehicles for communicating state, while identity, address, and physical representation are intentionally irrelevant.

In today's post, I'd like to touch on a controversial topic: singletons. While I think it is best to have a codebase without singletons, the real world shows me that singletons are often part of codebases.

Conferences are never just about the talks — they’re about time, travel, tradeoffs, and the people you meet along the way. After a year of attending several C++ events across formats and cities, this post is a personal look at how different conferences balance technical depth, community, and the experience of being there.

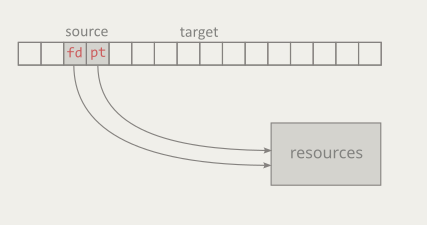

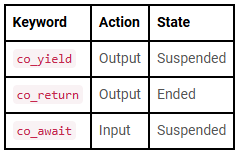

C++20 introduced coroutines. Quasar Chunawala, our guest editor for this edition, gives an overview.

std::chrono::high_resolution_clock sounds like the obvious choice when you care about precision, but its name hides some important caveats. In this article, we’ll demystify what “high resolution” really means in <chrono>, why this clock is often just an alias, and when—if ever—it’s actually the right tool to use.