C++ Compiler Benchmarks--Imagine Raytracer

On the blog Imagine Raytracer a few days ago:

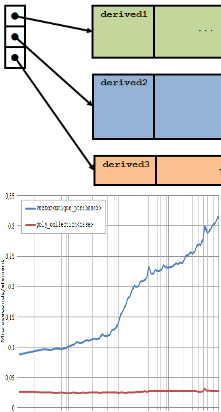

C++ Compiler Benchmarks

by Imagine Raytracer

From the article:

[...] I decided to try 4.9.2 which had just been released. This seemed to showed a fairly serious regression in terms of speed (speed being a pretty important aspect for a renderer), so I decided I'd do a more comprehensive comparison of the latest main compilers for the Linux platform, as back in 2011 and 2012 I used to do compiler benchmarks (GCC, LLVM and ICC) regularly every six months or so on my own code (including Imagine), and on the commercial VFX compositor made by the company I worked for at the time, and it had been a while since I'd compared them myself...

More in the "contiguous enables fast" department:

More in the "contiguous enables fast" department: On the theme of "contiguous enables fast":

On the theme of "contiguous enables fast": Some high-performance techniques that you an use for more than just parsing, including this week's darling of memory management:

Some high-performance techniques that you an use for more than just parsing, including this week's darling of memory management: