Extended Aggregate Initialisation in C++17--Jonathan Boccara

Were you aware of the change?

Extended Aggregate Initialisation in C++17

by Jonathan Boccara

From the article:

After upgrading a compiler to C++17, a certain piece of code that looked reasonable stopped compiling.

This code doesn’t use any deprecated features such as std::auto_ptr or std::bind1st, which were removed in C++17, but it stopped compiling nonetheless.

Understanding this compile error will let us better understand a new feature of C++17: extended aggregate initialisation...
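As a rough illustration of the feature named above (not the code from the article), here is a minimal sketch of extended aggregate initialisation. The types `Base` and `Derived` and the helper `make_example` are hypothetical names chosen for this example. It assumes a C++17 compiler.

```cpp
#include <string>
#include <type_traits>

// Hypothetical types, for illustration only (not taken from the article).
struct Base {
    int id;
};

// Public, non-virtual base and no user-provided constructors.
struct Derived : Base {
    std::string name;
};

// Since C++17, a class with public, non-virtual base classes can be an aggregate.
// In C++14, Derived would not qualify because it has a base class.
static_assert(std::is_aggregate_v<Derived>, "Derived is an aggregate in C++17");

// The first braced element initialises the Base subobject,
// the second initialises the name member.
inline Derived make_example() {
    return Derived{{42}, "widget"};
}
```

One way this change can affect older code: once a derived class counts as an aggregate, `Derived d{};` performs aggregate initialisation, so each base is initialised from the context of the calling code rather than through the derived class's implicit default constructor. A base whose default constructor is private and only accessible to the derived class through a `friend` declaration can then no longer be initialised this way.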

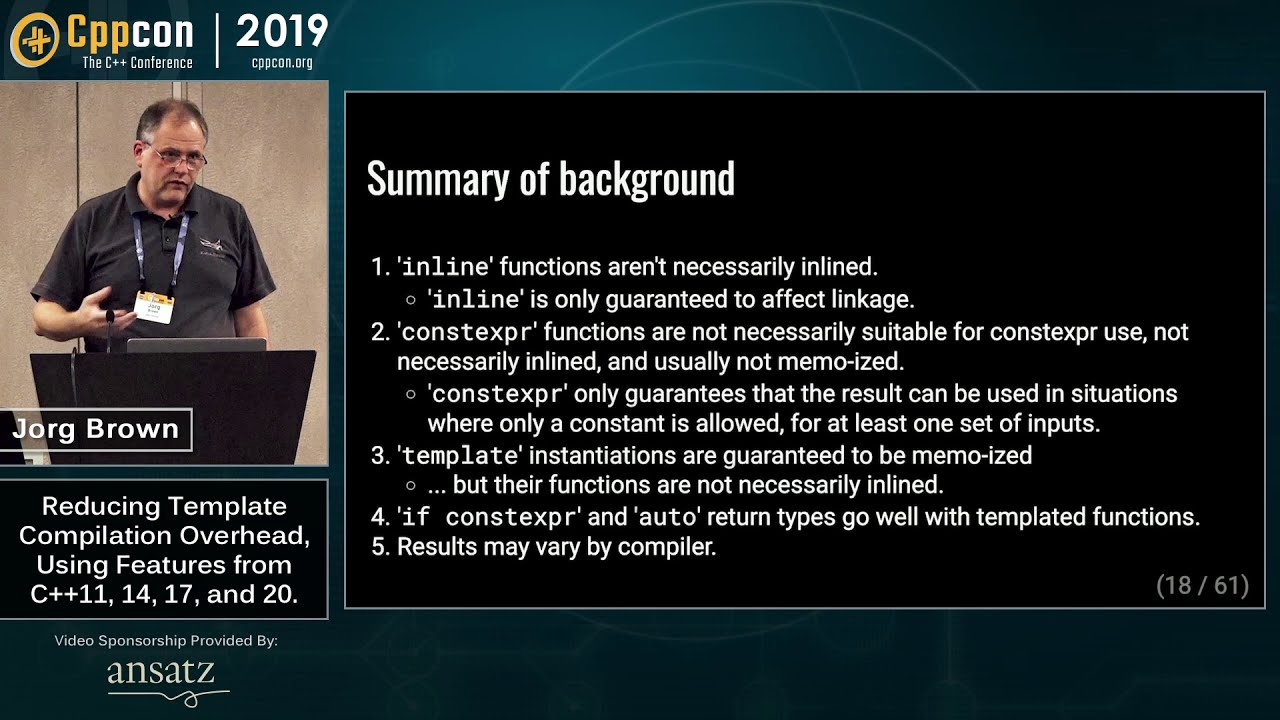

Registration is now open for CppCon 2021, which starts on October 24 and will be held
