Meeting C++ is sold out!

Yesterday, the last available ticket for Meeting C++ was sold!

Meeting C++ 2015 is sold out

by Jens Weller

From the article

In the beginning of October I decided to add 50 additional tickets to this year's Meeting C++...


By Meeting C++ | Oct 29, 2015 04:42 AM | Tags: community


By jdgarcia | Oct 24, 2015 04:40 PM | Tags: None

The Spanish-language C++ conference using std::cpp 2015 will bring the Spanish C++ community together for a free, full-day event.

using std::cpp 2015

November 18, 2015

University Carlos III of Madrid in Leganés

For the third year, University Carlos III of Madrid hosts using std::cpp, an event for C++ software developers held in Spain. Past editions of using std::cpp have drawn around 200 participants each year, of whom 75% were professional software developers and the remaining 25% academics and students. The event offers a good opportunity for the Spanish C++ community to gather, exchange experiences about the language, and get up-to-date information about it.

Some program highlights:

You can access videos and slides from previous years:

For more information you may contact J. Daniel Garcia.

By Adrien Hamelin | Oct 19, 2015 08:00 AM | Tags: c++14

A call for the next CppCon:

Call for Class Proposals

From the article:

The conference is asking for instructors to submit proposals for classes to be taught in conjunction with next September’s CppCon 2016.

If you are interested in teaching such a class, please contact us at [email protected] and we’ll send you an instructors’ prospectus and address any questions that you might have.

By Felix Petriconi | Oct 8, 2015 11:25 AM | Tags: community

ACCU 2016 is now putting together its program, and they want you to speak on C++. ACCU has long had a strong C++ track, though it is not a C++-only conference. If you have something to share, check out the call for papers.

Call for Papers

ACCU 2016

We invite you to propose a session for this leading software development conference.

The Call for Papers lasts 5 weeks and will close at midnight Friday 2015-11-13. Be finished by Friday the 13th...

By Meeting C++ | Oct 2, 2015 06:54 AM | Tags: user groups community

The monthly overview on upcoming user group meetings:

C++ User Group Meetings in October

by Jens Weller

From the article:

5.10 C++ UG Dublin - C/C++ Meeting with 3 Talks

7.10 C++ UG Saint Louis - Intro to Unity, Scott Meyers "gotchas", Group exercise

7.10 C++ UG Washington, DC - Q & A / Info Sharing

13.10 C++ UG New York - Joint October C++ Meetup with Empire Hacking

14.10 C++ UG Utah - Regular Monthly Meeting

14.10 C++ UG San Francisco/ Bay area - Presentation and Q&A

19.10 C++ UG Austin - North Austin Monthly C/C++ Pub Social

20.10 C++ UG Berlin - Thomas Schaub - Introduction to SIMD

20.10 C++ UG Hamburg - JavaX (really?)

21.10 C++ UG Washington, DC - Q & A / Info Sharing

21.10 C++ UG Bristol - Edward Nutting

21.10 C++ UG Düsseldorf - CppCon trip report & Multimethods

21.10 C++ UG Arhus - Lego & C++

24.10 C++ UG Italy - Clang, Xamarin, MS Bridge, Google V8

28.10 C++ UG San Francisco/ Bay area - Workshop and Discussion Group

29.10 C++ UG Bremen - C++ User Group

By Blog Staff | Sep 24, 2015 11:46 AM | Tags: None

This year the team is trying to get a few of the big talks up early while still busily recording the 100+ others still in progress. Yesterday, they posted Bjarne Stroustrup's opening keynote video less than 48 hours after the live talk, and we're pleased to see that as of this writing over 10,000 of you have already enjoyed it online in its first day!

Today, the team posted the video for Herb Sutter's Day 2 plenary talk, which he described as "part 2 of Bjarne's keynote" with a focus on type and memory safety with live demos. If you couldn't be at CppCon on Tuesday in person, we hope you enjoy it:

Writing Good C++14... by Default (YouTube) (slides)

by Herb Sutter, CppCon 2015 day 2 plenary session

This talk continues from Bjarne Stroustrup’s Monday keynote to describe how the open C++ core guidelines project is the cornerstone of a broader effort to promote modern C++. Using the same cross-platform effort Stroustrup described, this talk shows how to enable programmers to write production-quality C++ code that is, among other benefits, type-safe and memory-safe by default -- free of most classes of type errors, bounds errors, and leak/dangling errors -- and still exemplary, efficient, and fully modern C++.
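To make "bounds errors" a little more concrete, here is a minimal sketch of the idea behind a view type that carries its own length. `CheckedView` and `sum_all` are hypothetical names used only for illustration; this is not the GSL's `array_view` and not code from the talk.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical non-owning view over contiguous elements, loosely in the
// spirit of array_view: it stores a pointer together with a length,
// so every element access can be checked against that length.
template <class T>
class CheckedView {
public:
    CheckedView(T* data, std::size_t size) : data_(data), size_(size) {}
    explicit CheckedView(std::vector<T>& v) : data_(v.data()), size_(v.size()) {}

    std::size_t size() const noexcept { return size_; }

    // An out-of-range index throws instead of reading past the buffer.
    T& operator[](std::size_t i) const {
        if (i >= size_) throw std::out_of_range("CheckedView: index out of range");
        return data_[i];
    }

private:
    T* data_;
    std::size_t size_;
};

// Sums the elements; no separate (and possibly mismatched) length parameter.
template <class T>
T sum_all(CheckedView<T> view) {
    T total{};
    for (std::size_t i = 0; i < view.size(); ++i) total += view[i];
    return total;
}
```

Because the length travels with the pointer, a function like `sum_all` cannot be handed a mismatched size, and a bad index throws `std::out_of_range` rather than silently reading out of bounds. Throwing is a choice made for this sketch; the GSL's real types may handle violations differently.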

Related:

We hope posting these few highlights while CppCon is still in progress helps everyone in the worldwide C++ community share in the news and feel part of the gathering here in the Seattle area this week. Even if you couldn't be here in person this year to enjoy the full around-the-clock technical program and festival atmosphere, we hope you enjoy this talk on video.

By Blog Staff | Sep 23, 2015 08:50 AM | Tags: None

CppCon is in full swing, and once again all the sessions, panels, and lightning talks are being professionally recorded and will be available online -- about a month after the conference, because it takes time to process over 100 talks!

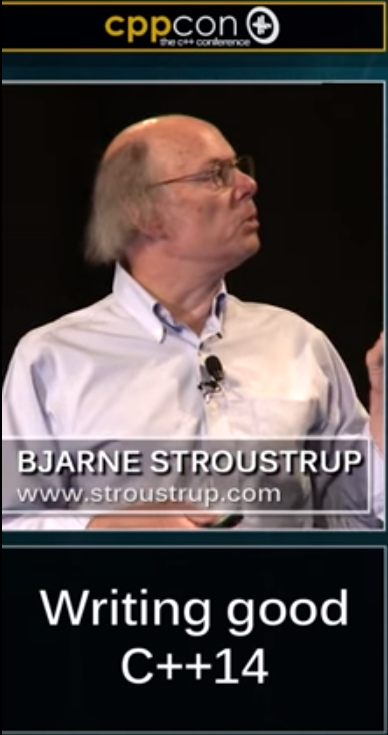

However, because of the importance of Bjarne's opening keynote announcements on Monday, the team has pulled out all the stops to process his video and get it up on YouTube (and perhaps soon on Channel 9 as well, for areas where YouTube is not available). It's there now, so if you couldn't be at CppCon on Monday in person, check it out:

Writing Good C++14 (YouTube) (slides)

by Bjarne Stroustrup, CppCon 2015 opening keynote

The team says that they may also fast-track the other daily keynote/plenary sessions as well, some of which are directly related to Bjarne's keynote. If that happens, we'll post links here too. In the meantime, enjoy Bjarne's groundbreaking talk -- as one CppCon attendee and longtime C++-er said, "this is one of the most exciting weeks for C++ I can remember." We agree.

By Blog Staff | Sep 21, 2015 04:43 PM | Tags: None

The slides from Bjarne Stroustrup's keynote this morning have now been posted at github/isocpp/CppCoreGuidelines under /talks:

Writing Good C++14

by Bjarne Stroustrup

CppCon 2015 keynote talk, Mon Sep 21, 2015

For context, see this morning's announcement.

By Blog Staff | Sep 21, 2015 09:00 AM | Tags: None

This morning in his opening keynote at CppCon, Bjarne Stroustrup announced the C++ Core Guidelines (github.com/isocpp/CppCoreGuidelines), the start of a new open source project on GitHub to build modern authoritative guidelines for writing C++ code. The guidelines are designed to be modern, machine-enforceable wherever possible, and open to contributions and forking so that organizations can easily incorporate them into their own corporate coding guidelines.

The initial primary authors and maintainers are Bjarne Stroustrup and Herb Sutter, and the guidelines so far were developed with contributions from experts at CERN, Microsoft, Morgan Stanley, and several other organizations. The guidelines are currently in a “0.6” state, and contributions are welcome. As Stroustrup said: “We need help!”

Stroustrup said: “You can write C++ programs that are statically type safe and have no resource leaks. You can do that without loss of performance and without limiting C++’s expressive power. This supports the general thesis that garbage collection is neither necessary nor sufficient for quality software. Our core C++ guidelines makes such code simpler to write than older styles of C++ and the safety can be validated by tools that should soon be available as open source.”
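As a minimal sketch of the style Stroustrup describes, ownership below is expressed through standard library types, so cleanup is deterministic and needs no garbage collector. `Widget` and `build_and_discard` are hypothetical names, and the live-object counter exists only to make the absence of leaks observable; this is not code from the guidelines.

```cpp
#include <memory>
#include <vector>

// Counts live Widget objects so that leaks (or their absence) are observable.
struct Widget {
    static int live;
    Widget() { ++live; }
    ~Widget() { --live; }
    Widget(const Widget&) = delete;
    Widget& operator=(const Widget&) = delete;
};
int Widget::live = 0;

// Ownership is part of the type: when the vector goes out of scope, each
// unique_ptr destroys its Widget. No delete is written anywhere, and the
// objects are released even if a later push_back throws.
int build_and_discard(int n) {
    std::vector<std::unique_ptr<Widget>> widgets;
    for (int i = 0; i < n; ++i) {
        widgets.push_back(std::make_unique<Widget>()); // std::make_unique is C++14
    }
    return Widget::live; // how many Widgets are alive while still in scope
}
```

After `build_and_discard(3)` returns 3, `Widget::live` is back to 0: the objects existed while in scope and were destroyed at the closing brace, with no manual cleanup.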

From Stroustrup’s talk abstract:

In this talk, I describe a style of guidelines that can be deployed to help most C++ programmers... The rules are prescriptive rather than merely sets of prohibitions, and about much more than code layout... The core guidelines and a guideline support library reference implementation will be open source projects freely available on all major platforms (initially, GCC, Clang, and Microsoft).

Although the repository was not officially announced until today, it was made public last week and was noticed: CppCoreGuidelines was the #1 trending repository worldwide on GitHub on Friday, and is currently the #1 trending repository worldwide for the past week, across all languages and projects.

Stroustrup also announced two other related projects.

Guideline Support Library (GSL): First, the C++ Core Guidelines also specify a small Guideline Support Library (GSL), a set of common types like array_view and not_null that make the modern guidelines easier to follow. An initial open source reference implementation, contributed and supported by Microsoft, is now available on GitHub at github.com/Microsoft/GSL. It is written in portable C++ that should work on any modern compiler and platform, and has been tested on Clang/LLVM 3.6 and GCC 5.1 for Linux, with Xcode and GCC 5.2.0 for OS X, and with Microsoft Visual C++ 2013 (Update 5) and 2015 for Windows. This is both a supported library and an initial reference implementation; other implementations by other vendors are encouraged, as are forks of and contributions to this implementation.
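To show the idea behind a type like not_null, here is a deliberately simplified sketch. `NotNull` and `read_value` are hypothetical names; the real `gsl::not_null` in the Microsoft/GSL repository has its own interface and failure behavior, which this does not attempt to reproduce.

```cpp
#include <cstddef>
#include <stdexcept>

// Hypothetical, simplified wrapper in the spirit of not_null:
// it refuses to hold a null pointer, so callees need not re-check.
template <class T>
class NotNull {
public:
    explicit NotNull(T* p) : ptr_(p) {
        // Assumption for this sketch: a null runtime value throws.
        if (ptr_ == nullptr) throw std::invalid_argument("NotNull: null pointer");
    }
    NotNull(std::nullptr_t) = delete; // a literal nullptr fails to compile

    T* get() const noexcept { return ptr_; }
    T& operator*() const noexcept { return *ptr_; }
    T* operator->() const noexcept { return ptr_; }

private:
    T* ptr_;
};

// The parameter type documents (and enforces) that p is never null.
int read_value(NotNull<int> p) { return *p; }
```

The null check happens once, at construction, and the function signature tells every caller that a null pointer is not acceptable, which is the kind of intent a checker tool can then rely on.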

Checker tool: Second, the C++ Core Guidelines are designed to be machine-enforceable wherever possible, and include many rules that can be checked by a compiler, lint, or other tool. An initial implementation based on Microsoft’s Visual Studio will be demonstrated in several talks at CppCon this week, including Herb Sutter’s Day 2 plenary session tomorrow morning. This implementation will be made available as a Windows binary in October, with the intention to open source the implementation thereafter. This too will become a supported tool and an initial reference implementation open to others; other implementations by other vendors of compilers, linters, and other tools are encouraged.

A number of other CppCon talks will go deeper into the related topics, notably the following talks by speakers who collaborated on the Guidelines effort:

Herb Sutter: Writing Good C++14 by Default (Tue 10:30am)

Gabriel Dos Reis: Large Scale C++ with Modules: What You Should Know (Tue 2:00pm)

Neil MacIntosh: More Than Lint: Modern Static Analysis for C++ (Wed 2pm)

Neil MacIntosh: A Few Good Types: Evolving array_view and string_view for Safe C++ Code (Wed 3:15pm)

Gabriel Dos Reis: Contracts for Dependable C++ (Wed 4:45pm)

Eric Niebler: Ranges and the Future of the STL (Fri 10:30am)

(and more)

If you’re at CppCon this week, watch for those talks. If you aren’t, don't worry: as it did last year, CppCon 2015 is professionally recording all talks, which will be freely available online about a month after the conference.

By Blog Staff | Sep 21, 2015 06:28 AM | Tags: None

CppCon 2015 starts in two hours:

The Visual C++ team is at CppCon 2015

by Eric Battalio

From the article:

Steve Carroll and Ayman Shoukry cover what's new in VC++ early on Thursday morning. Ankit Asthana and Marc Gregoire will update us on the [Clang-based] support for cross-platform mobile development in VS 2015... James Radigan will talk about the work that we're doing to connect the Clang front end to the Microsoft optimizing back end [and] how we're working with the community to make this possible... Gabriel Dos Reis will talk about C++ Modules, a new design that helps with componentization, isolation from macros, scalable build, and support for modern semantics-aware developer tools. ...

Stephan T. Lavavej will discuss developments in <functional> from C++11 to C++17. We'll hear about contracts from Gabriel Dos Reis, and ... Artur Laksberg goes into the details of the Concurrency TS while Gor Nishanov describes the exciting future of concurrency in C++: coroutines, an abstraction that makes your code both simpler and execute faster. ...

You might have noticed a new repo that the Standard C++ Foundation published last week: the C++ Core Guidelines... Bjarne will talk about this in his keynote on Monday morning, and Herb Sutter will continue the discussion with his talk on Tuesday. And we've not only been working on the guidelines: we've implemented a library to support the guidelines and enforcement tools that automatically verify that your code follows them. Neil MacIntosh will discuss both of these projects in his back-to-back talks on Wednesday afternoon. The C++ Core Guidelines are a huge step forward for the language and the Visual C++ team is excited to be part of the group working on bringing them to reality!