Trip report: Meeting C++ 2025 -- Sandor Dargo

From the article:

I remember that last year I said that Berlin in November is the perfect place to have a conference because you want to be inside that four-star hotel and not outside. This year, the conference was held a week earlier, and the weather was so nice that it was actually tempting to go out and explore.

But people resisted the temptation. The lineup and content were very strong — this year there were more than 50 talks across 5 different tracks. Also, Meeting C++ is a fully hybrid conference, so you can join any talk online as well.

It might sound funny, but I must mention that the food is just great at Meeting C++. It’s probably the conference with the best catering I’ve ever been to — from lunch to coffee breaks, everything was top-notch.

This year, there were no evening programs. I’m not complaining; it’s both a pity and a blessing, and I’m not sure how I feel about it. For example, when I first attended C++ On Sea, there were no evening events, and I really enjoyed discovering Folkestone in the evenings. Over the years, the schedule there got extended, and sometimes I had no time to visit my favorite places. But at least some socializing was guaranteed. One can say that you can do it on your own, but many of us are introverted, and if we’re not forced to socialize, we just won’t. That’s even easier to avoid in a big city like Berlin. I remember that last year I didn’t have time to go out until the end of the conference. It was different this year.

But let’s talk about the talks.

My three favourite talks

Let me share with you the three talks I liked the most. They are listed in chronological order...

C++11 gave us

C++11 gave us  The Budapest C++ Meetup was a great reminder of how strong and curious our local community is. Each talk approached the language from a different angle — Jonathan Müller from the perspective of performance, mine from design and type safety, and Marcell Juhász from security — yet all shared the same core message: understand what C++ gives you and use it wisely.

The Budapest C++ Meetup was a great reminder of how strong and curious our local community is. Each talk approached the language from a different angle — Jonathan Müller from the perspective of performance, mine from design and type safety, and Marcell Juhász from security — yet all shared the same core message: understand what C++ gives you and use it wisely. When working with legacy or rigid codebases, performance bottlenecks can emerge from designs you can’t easily change—like interfaces that force inefficient map access by index. This article explores how a simple

When working with legacy or rigid codebases, performance bottlenecks can emerge from designs you can’t easily change—like interfaces that force inefficient map access by index. This article explores how a simple  A missing

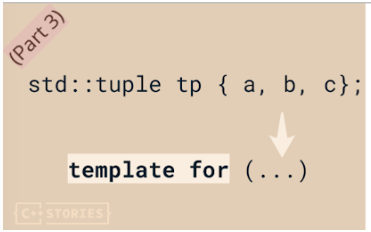

A missing  In this final part of the tuple-iteration mini-series, we move beyond C++20 and C++23 techniques to explore how C++26 finally brings first-class language support for compile-time iteration. With structured binding packs (P1061) and expansion statements (P1306), what once required clever template tricks can now be written in clean, expressive, modern C++.

In this final part of the tuple-iteration mini-series, we move beyond C++20 and C++23 techniques to explore how C++26 finally brings first-class language support for compile-time iteration. With structured binding packs (P1061) and expansion statements (P1306), what once required clever template tricks can now be written in clean, expressive, modern C++. std::format allows us to format values quickly and safely. Spencer Collyer demonstrates how to provide formatting for a simple user-defined class.

std::format allows us to format values quickly and safely. Spencer Collyer demonstrates how to provide formatting for a simple user-defined class. Modern C++ offers elegant abstractions like std::ranges that promise cleaner, more expressive code without sacrificing speed. Yet, as with many abstractions, real-world performance can tell a more nuanced story—one that every engineer should verify through careful benchmarking.

Modern C++ offers elegant abstractions like std::ranges that promise cleaner, more expressive code without sacrificing speed. Yet, as with many abstractions, real-world performance can tell a more nuanced story—one that every engineer should verify through careful benchmarking.