SObjectizer Tales – 23. Mutable messages--Marco Arena

A new episode of the series about SObjectizer and message passing:

SObjectizer Tales – 23. Mutable messages

by Marco Arena

From the article:

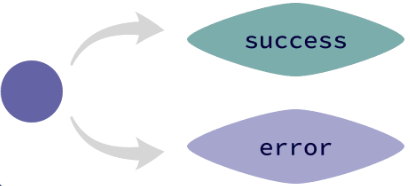

In this episode we explore mutable messages, a feature enabling us to exchange messages that can be modified by the receiver.
