Writing min function, part 4: Const-Correctness -- Fernando Pelliccioni


by Fernando Pelliccioni

From the Article:

This is the fourth article of the series called “Writing min function”.

I still have to fix two mistakes made in the code of the previous posts. One of them is C++-specific, and the other is a mistake that could be made in any programming language.

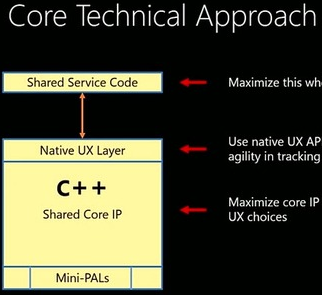

Modern C++, modern apps:


At CppCon last month, InformIT recorded this video interview. It has now been posted:
