Modern Asynchronous Request/Reply with DDS-RPC -- Sumant Tambe

A look at the future.then extension and the coroutines proposal:

Modern Asynchronous Request/Reply with DDS-RPC

by Sumant Tambe

From the article:

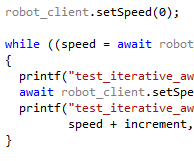

A quick look into N4402 reveals that the await feature relies on composable futures, especially .then (serial composition) combinator. The compiler does all the magic of transforming the asynchronous code to a state machine that manages suspension and resumption automatically. It is a great example of how compiler and libraries can work together producing a beautiful symphony.... Indeed, this is truly an exciting time to be a C++ programmer.
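To make the idea of serial composition concrete, here is a minimal sketch in plain C++14. It is not the N4402 await feature, not the proposed `future.then` extension, and not the DDS-RPC API. The names `then`, `async_add`, and `add_double_and_format` are hypothetical, and `std::async` stands in for a real asynchronous request.

```cpp
#include <cassert>
#include <future>
#include <string>
#include <utility>

// Hypothetical helper (an assumption for illustration): attaches a
// continuation to a std::future and returns a future for its result.
// Unlike a true non-blocking .then, this parks one thread per
// continuation until the antecedent future becomes ready.
template <typename T, typename F>
auto then(std::future<T> fut, F continuation)
    -> std::future<decltype(continuation(std::declval<T>()))>
{
    assert(fut.valid());  // the antecedent must refer to a shared state
    return std::async(std::launch::async,
        [f = std::move(fut), c = std::move(continuation)]() mutable {
            return c(f.get());  // wait for the antecedent, then continue
        });
}

// Hypothetical stand-in for an asynchronous request/reply call.
std::future<int> async_add(int a, int b)
{
    return std::async(std::launch::async, [a, b] { return a + b; });
}

// Serial composition: request a sum, then double it, then format it.
std::future<std::string> add_double_and_format(int a, int b)
{
    auto doubled = then(async_add(a, b), [](int sum) { return sum * 2; });
    return then(std::move(doubled),
                [](int v) { return "result=" + std::to_string(v); });
}
```

Calling `add_double_and_format(1, 2).get()` runs the three steps in order and yields the formatted string. With await, the same chain would read like straight-line code, and the compiler would generate the suspension and resumption logic that the lambdas spell out by hand here.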
