IIFE for Complex Initialization -- Bartlomiej Filipek

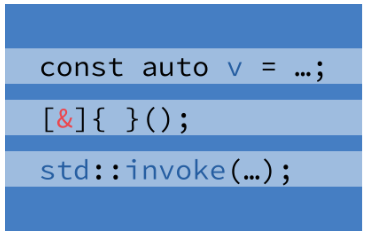

What do you do when the code for a variable initialization is complicated? Do you move it to another method or write it inside the current scope? Bartlomiej Filipek presents a trick that allows computing a value for a variable, even a const variable, with a compact notation.



by Bartlomiej Filipek


I hope you’re initializing most variables as `const` (so that the code is more explicit, and the compiler can reason better about the code and optimize it). For example, it’s easy to write:

```cpp
const int myParam = inputParam * 10 + 5;
```

or even:

```cpp
const int myParam = bCondition ? inputParam * 2 : inputParam + 10;
```

But what about complex expressions, where we need several lines of code or where the `?:` operator is not sufficient? ‘It’s easy,’ you say: you can wrap that initialization in a separate function.
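The separate-function approach can be sketched as follows. This is a minimal illustration, not code from the article: `computeParam`, `useParam`, and the clamping rule are hypothetical stand-ins for whatever multi-line logic a real initialization needs. The point is that the caller can still declare `myParam` as `const`.

```cpp
// Hypothetical helper: the multi-line initialization logic lives in its own
// function, so the caller can keep the result const.
int computeParam(int inputParam, bool bCondition) {
    int value = inputParam;
    if (bCondition) {
        value *= 2;
    } else {
        value += 10;
    }
    // Extra step that a single ?: expression would struggle to express:
    // clamp to an assumed (hypothetical) upper bound of 100.
    if (value > 100) {
        value = 100;
    }
    return value;
}

// Hypothetical caller: the variable is initialized once and stays const.
int useParam(int inputParam, bool bCondition) {
    const int myParam = computeParam(inputParam, bCondition);
    return myParam;
}
```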

While that’s the right answer in most cases, I’ve noticed that in reality many people write the initialization code directly in the current scope. That forces you to stop using `const`, and the code gets a bit uglier.
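In C++, the IIFE (immediately invoked function expression) from the title is commonly written as a lambda that is called right where it is defined. The sketch below is a hedged illustration rather than the article's own listing: `makeLabelMutable` and `makeLabelIIFE` are hypothetical names, and the labeling logic is invented purely for demonstration. The first function shows the in-scope style that gives up `const`; the second keeps the same logic in place but still initializes a `const` variable.

```cpp
#include <string>

// In-scope style: the variable is declared first and assigned afterwards,
// so it cannot be const.
std::string makeLabelMutable(int count, bool verbose) {
    std::string label;  // not const: modified below
    if (count == 0) {
        label = "empty";
    } else if (count == 1) {
        label = "one item";
    } else {
        label = std::to_string(count) + " items";
    }
    if (verbose) {
        label += " (verbose)";
    }
    return label;
}

// IIFE style: the same logic inside a lambda that is invoked immediately,
// so its result can initialize a const variable in place.
std::string makeLabelIIFE(int count, bool verbose) {
    const std::string label = [&] {
        std::string result;
        if (count == 0) {
            result = "empty";
        } else if (count == 1) {
            result = "one item";
        } else {
            result = std::to_string(count) + " items";
        }
        if (verbose) {
            result += " (verbose)";
        }
        return result;
    }();  // the trailing () calls the lambda right away
    return label;
}
```

Capturing by reference with `[&]` lets the lambda read the surrounding variables without copying them; because the lambda runs immediately, the references cannot outlive the objects they refer to.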
