Registration is now open for CppCon 2021, which starts on October 24 and will be held both in person and online. To whet your appetite for this year’s conference, we’re posting videos of some of the top-rated talks from our most recent in-person conference in 2019 and our online conference in 2020. Here’s another CppCon talk video we hope you will enjoy – and why not register today for CppCon 2021 to attend in person, online, or both!

Registration is now open for CppCon 2021, which starts on October 24 and will be held both in person and online. To whet your appetite for this year’s conference, we’re posting videos of some of the top-rated talks from our most recent in-person conference in 2019 and our online conference in 2020. Here’s another CppCon talk video we hope you will enjoy – and why not register today for CppCon 2021 to attend in person, online, or both!

Abusing Your Memory Model for Fun and Profit

by Samy Al Bahra, Paul Khuong

Summary of the talk:

The most efficient concurrent C++ data structures used in the wild today usually achieve break-neck performance by either constraining their workload or constraining correctness to a particular memory model. The audience will learn about the Wild West of abusing memory models for performance and simplification, through real world examples. Non-blocking data structures and their benefits often come at the cost of increased latency because they require additional complexity in the common case. There are plenty of exceptions to this if the requirements of the data structure are relaxed, such as supporting only a bounded level of write or read concurrency or if correctness is constrained to a particular memory model. For this reason, well-designed specialized non-blocking data structures guarantee improved resiliency, throughput and latency in all cases compared to alternatives relying on traditional concurrency primitives. Specialized concurrent structures are common place in the Linux kernel and other performance critical systems.

You will learn about foundational concepts to understanding your underlying hardware's memory model and abusing memory models for fun and profit:

* Cache coherency

* Store Buffers

* Pipelines and speculative executionThis talk provides real-world examples that exploit the x86-TSO model to their advantage:

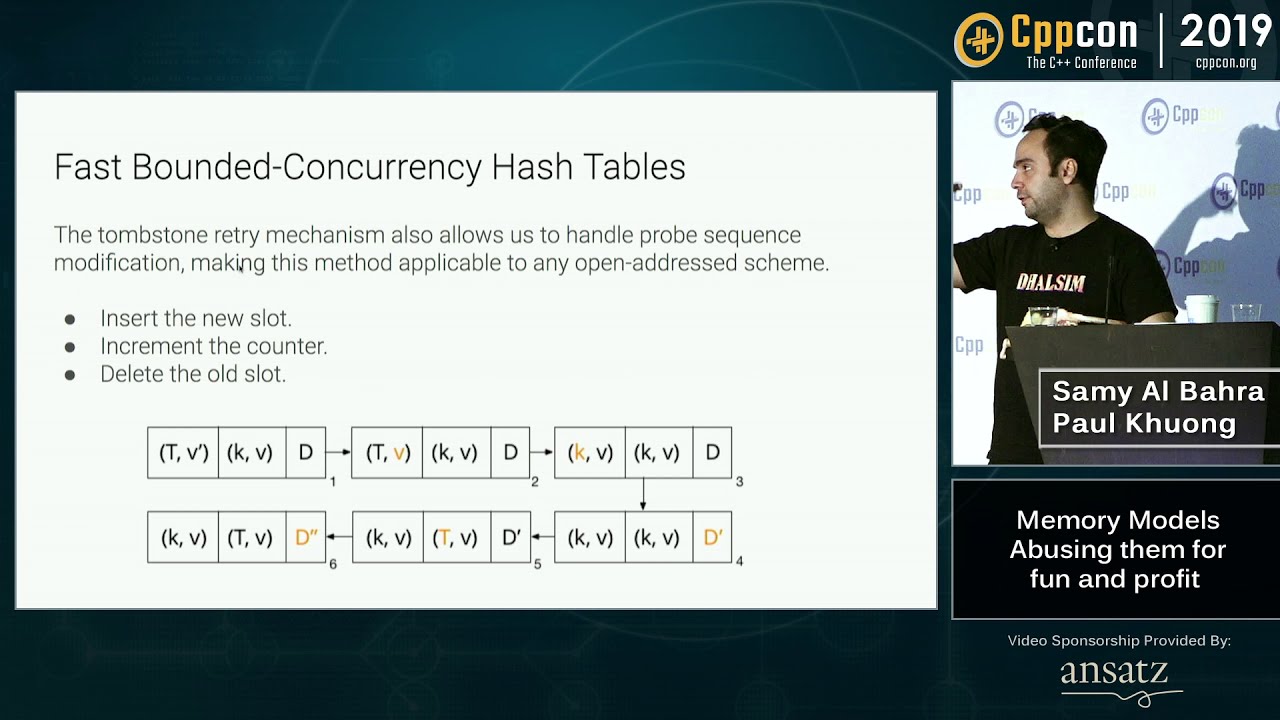

* A general technique to turn literally, any, open-addressed hash table into a concurrent hash table with low to negligible (near 0) cost. The transformation makes your hash table wait-free for writers and mostly wait-free for readers (lock-free in hypothetical worse cases) and is practical for languages such as C++. The mechanism is superior to the previously popular Azure lock-free hash table and even more importantly, practical for any non-garbage-collected environment. The overhead is negligible on TSO and low on non-TSO.

* Blazingly fast event counters. An extremely efficient replacement for condition variables is introduced and faster than any other alternative. This is implemented without requiring any heavy-weight atomic operations on the fast path by exploiting properties of the x86-TSO model.

* Scalable memory management: Exploit the ordering and visibility constraints of the underlying architecture for blazingly fast implementations of RCU and other safe memory reclamation schemes.

* and more.

Add a Comment

Comments are closed.